The big camera manufacturers have remained pretty quiet about the rise of AI image generators like Midjourney so far, but Nikon has now broken cover, with a campaign that encourages a return to "natural intelligence" in photography.

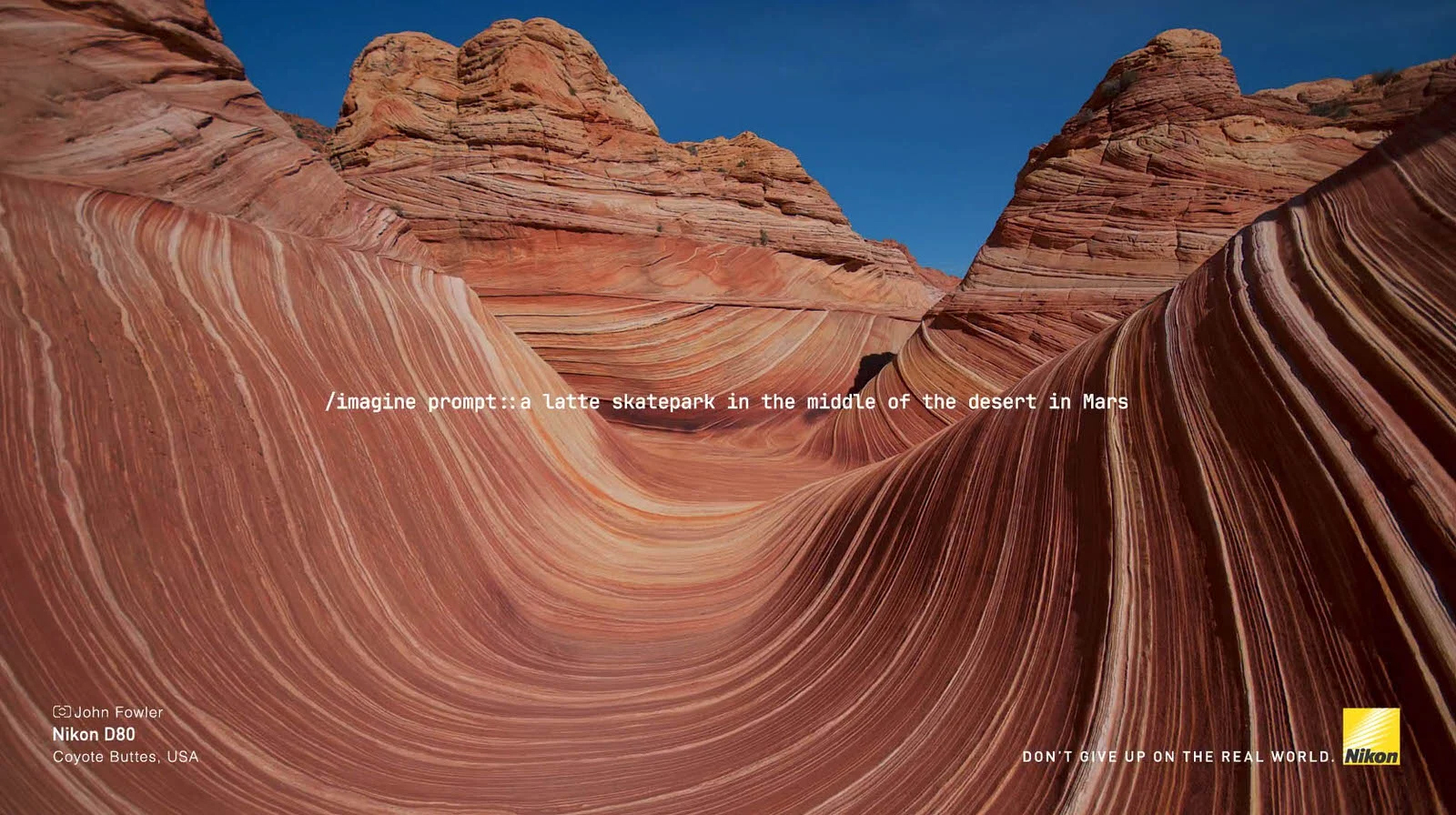

As spotted by Little Black Book and PetaPixel, a clever Nikon Peru campaign shows a succession of stunning real-world photos, on which are superimposed the AI prompts that might have inspired them, had they been AI-generated.

The campaign, which features the tagline "Don't give up on the real world", has been designed to steal back the photography limelight from AI image generators, which have dominated the headlines this year – even though there are plenty of things the likes of Midjourney and Dall-E still can't do.

While it's the kind of argument you'd expect to hear from one of the world's biggest camera manufacturers – Nikon still sits third in global camera market share, behind Sony and Canon – it will also strike a chord with traditional photographers.

As Nikon Peru states in its promo video, "this obsession with the artificial is making us forget that our world is full of amazing natural places that are often stranger than fiction".

This AI revolution is seemingly having real-world consequences, with Nikon Peru adding that "millions of people around the world are generating surreal images just by entering a few keywords on a website, which is directly affecting photographers, especially in places with fewer resources". Those places include Latin America, where editorial and advertising photographers are "losing space, work and profits", Nikon adds.

The AI backlash begins

The flipside to Nikon's argument is that AI image generators – particularly those that are trained on photography that's been licensed from creators, like Adobe Firefly – are opening up dramatic landscape 'photography' to new audiences who can't afford to travel to remote places with expensive camera gear.

But Nikon clearly feels that, rather than co-existing with traditional photography, AI image generators could significantly hit the demand for real-world photography, and it says it has already seen this happen.

However you feel about AI image generation, Nikon's campaign is the first significant backlash from one of the major camera manufacturers – and is a nicely executed one, too. For example, a photo of Iceland's Reynisfjara beach (above) is given the mock AI prompt "a realistic Minecraft cliff at the seashore in winter season".

In another photo, which you can see higher up the page, the Coyote Buttes cliffs in Arizona, USA, are described in their AI prompt as "a latte skatepark in the middle of the desert in Mars".

Of course, AI and cameras aren't necessarily polar opposites – there are several examples of the ways AI is transforming how traditional cameras work, from increasingly smart autofocus to improved image processing. So the future of photography is perhaps one of peaceful co-existence between the two tools, rather than a deathmatch between Midjourney and Nikon.

from TechRadar: Photography & video capture news https://ift.tt/wI2i6aW
